The first article in this series presented the motivations and advantages of embedding a container image into a Linux embedded system and considered the challenges that this new paradigm brings up.

The second article presented different approaches to embed a container into the Yocto build system, with Docker engine installed on the target machine. A review of the second article is recommended before proceeding.

This article covers these approaches in more depth, showing Yocto recipe examples and discussing system design and architecture. It is intended for readers who want to master Yocto and its system architecture.

We want to demonstrate these approaches with three different use cases:

- Embed one container image archive using Podman,

- Embed multiple image archives using Podman and orchestrate their execution using docker-compose,

- Embed one container store into the root filesystem using Docker.

Please note that the source code is available in the “meta-embedded-containers” repository on the Savoir-faire Linux GitHub account.

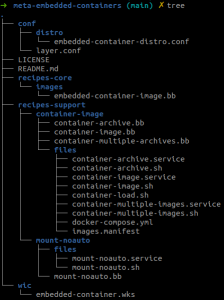

Meta-embedded-containers layer

Layout

The meta-embedded-containers layer gathers all the components needed to build a simple read-only SquashFS root filesystem image.

Image recipe file

The “embedded-container-image.bb” image recipe is based on the “core-image-base.bb” recipe and installs the Docker engine and one of the container-images recipes.

It also formats the root filesystem as a read-only SquashFS image.

```
require recipes-core/images/core-image-base.bb

DESCRIPTION = "Core image with embedded container images"

IMAGE_FSTYPES += "squashfs"

WKS_FILE = "embedded-container.wks"

IMAGE_FEATURES_append = " \
    debug-tweaks \
    post-install-logging \
    read-only-rootfs \
    ssh-server-dropbear \
"

IMAGE_INSTALL_append = " \
    container-images \
    docker \
"
```

Distribution configuration file

The “embedded-container-distro.conf” distribution file is based on Poky and adds a list of build-host tools that build tasks are allowed to call, using the “HOSTTOOLS_NONFATAL” BitBake variable. This is needed because, at the time this article was written, Yocto did not provide native recipes for building these tools.

The distribution file also specifies systemd as the init manager and adds the virtualization feature.

```
include conf/distro/poky.conf

DISTRO = "embedded-container-distro"

# Append Poky-based features
DISTRO_FEATURES_append = " virtualization"
DISTRO_FEATURES_append = " systemd"

# Add these binaries to the HOSTTOOLS_NONFATAL variable to allow them to
# be called from within the recipe build tasks. They are specified as
# NONFATAL because not all of them are installed on the build system
# at the same time.
HOSTTOOLS_NONFATAL += "sudo pidof dockerd podman newgidmap newuidmap"

# Use systemd as init manager
VIRTUAL-RUNTIME_init_manager = "systemd"
DISTRO_FEATURES_BACKFILL_CONSIDERED += "sysvinit"
VIRTUAL-RUNTIME_initscripts = ""
```
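Because these tools are listed as NONFATAL, BitBake does not complain when one of them is missing from the build host; the failure only appears when a task actually calls it. A small pre-flight check can surface this earlier. The sketch below is a hypothetical helper (`check_host_tools` is not part of the layer) that reports which of the given commands cannot be found in PATH.

```shell
#!/bin/sh
# Hypothetical pre-flight check (not part of meta-embedded-containers):
# print the tools that cannot be found in PATH and return non-zero if any
# of them is missing.
check_host_tools() {
    missing=""
    for tool in "$@"; do
        command -v "$tool" >/dev/null 2>&1 || missing="${missing} ${tool}"
    done
    if [ -n "$missing" ]; then
        echo "missing host tools:${missing}" >&2
        return 1
    fi
    return 0
}
```

For example, `check_host_tools podman newuidmap newgidmap` could be run before building the Podman-based recipes, and `check_host_tools sudo pidof dockerd` before the Docker-based one.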

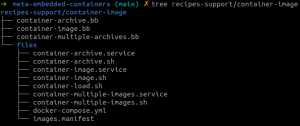

Container-image recipes

The “container-images” directory contains three recipes, one for each of the approaches and use cases detailed in the next sections. Future work could regroup some common tasks and files into a single class.

Image manifest file

The manifest file (“images.manifest”) lists the Docker container image(s) to be installed on the target, one per line: the image name (with its registry, if any), its tag, and its local name, which is also used to name the archive.

```
busybox 1.32.0 busybox_container
redis 6-alpine redis_container
httpd 2-alpine httpd_container
```
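Each line therefore drives three names used by the recipe tasks: the reference pulled from the registry (“name:version”), the local tag applied with “podman tag” (“local-name:version”), and the archive file written by the do_save_image task (“local-name-version.tar”). The hypothetical helper below (`manifest_entries` is an illustration, not part of the layer) prints these names for each entry, which can be handy for checking a manifest before building.

```shell
#!/bin/sh
# Hypothetical helper: for each manifest entry, print the pulled reference,
# the local tag and the archive name ("<local-name>-<version>.tar").
manifest_entries() {
    while read -r name version tag _; do
        # Skip blank lines and comment lines.
        case "$name" in ''|'#'*) continue ;; esac
        printf '%s:%s %s:%s %s-%s.tar\n' \
            "$name" "$version" "$tag" "$version" "$tag" "$version"
    done < "${1:?usage: manifest_entries MANIFEST}"
}
```

For the first manifest line, this prints `busybox:1.32.0 busybox_container:1.32.0 busybox_container-1.32.0.tar`.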

Embed container archive file(s) with Podman

Embedding one or more container archive file(s) requires the following steps:

Building

- Podman and the uidmap tools (newuidmap and newgidmap) must be installed on the build machine.

In our case, build machine requirement is:

- Pull the Docker image(s) specified in the “images.manifest” file. Podman stores the pulled images in its own store (i.e. the “${HOME}/.local/share/containers” directory).

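```
do_pull_image() {
    [ -f "${WORKDIR}/${MANIFEST}" ] || bbfatal "${MANIFEST} does not exist"

    local name version
    # Read the manifest line by line: the loop takes its input from the
    # file (<); redirecting its output (>) would truncate the manifest.
    while read -r name version _; do
        if ! PATH=/usr/bin:${PATH} podman pull "${name}:${version}"; then
            bbfatal "Error pulling ${name}:${version}"
        fi
    done < "${WORKDIR}/${MANIFEST}"
}
```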

- Tag the container images with their local name.

```
do_tag_image() {
    [ -f "${WORKDIR}/${MANIFEST}" ] || bbfatal "${MANIFEST} does not exist"

    local name version tag
    # Give each pulled image its local name, keeping the same version.
    while read -r name version tag _; do
        if ! PATH=/usr/bin:${PATH} podman tag "${name}:${version}" "${tag}:${version}"; then
            bbfatal "Error tagging ${name}:${version}"
        fi
    done < "${WORKDIR}/${MANIFEST}"
}
```

- Save the image(s) into a Docker tar archive file.

```
do_save_image() {
    local name version archive tag

    mkdir -p "${STORE_DIR}"

    while read -r name version tag _; do
        archive="${tag}-${version}.tar"

        # Remove any archive left over from a previous build.
        if [ -f "${WORKDIR}/${archive}" ]; then
            bbnote "Removing the archive ${WORKDIR}/${archive}"
            rm "${WORKDIR}/${archive}"
        fi

        if ! PATH=/usr/bin:${PATH} podman save --storage-driver overlay "${tag}:${version}" \
                -o "${WORKDIR}/${archive}"; then
            bbfatal "Error saving ${tag} container"
        fi
    done < "${WORKDIR}/${MANIFEST}"
}
```

Podman understands the Docker image format and, through the “podman save” command, can produce several types of container archives, including Docker archives. The Docker image(s) pulled into Podman’s store are saved as archive file(s) in the recipe’s working directory. In this example, Podman uses the overlay storage driver. We had issues with the vfs storage driver when pulling container images with many layers: vfs creates one full copy of the file system for each layer declared in the container file, each copy including the contents of all previous layers, and it performs no file-system-level deduplication, so it takes excessive space on disk (see the reported issue).

There are some workarounds for this issue. One is to run the container engine inside a container with the overlay storage driver, pulling the layers onto an ext4 volume; this only works if overlayfs is supported on the build system. Another is to reduce the number of layers of the container image, either by reducing the number of RUN commands or by using the “docker build --squash” option. If the container image is simpler, the vfs driver may be used instead.
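The overlay-or-vfs decision can be made explicit in the recipe. The sketch below is a hypothetical helper (`pick_storage_driver` is not in the layer) that returns “overlay” when the running kernel lists overlayfs in /proc/filesystems and “vfs” otherwise. It is a coarse check: the overlay module may be available but not yet loaded, in which case it is not listed. Since the pull, tag and save tasks all use the same Podman store, the same driver should be used for every Podman call in the recipe.

```shell
#!/bin/sh
# Hypothetical helper: choose the Podman storage driver for the build host.
# $1 optionally overrides the file to inspect (defaults to /proc/filesystems).
pick_storage_driver() {
    if grep -qw overlay "${1:-/proc/filesystems}" 2>/dev/null; then
        echo overlay
    else
        echo vfs
    fi
}
```

The result could then be passed to Podman’s global option, e.g. `podman --storage-driver "$(pick_storage_driver)" save ...`.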

- Store the archive into the root filesystem.

```
do_install() {
    local name version archive tag

    # Install the manifest and every archive it lists.
    install -d "${D}${datadir}/container-images"
    install -m 0400 "${WORKDIR}/${MANIFEST}" "${D}${datadir}/container-images/"
    while read -r name version tag _; do
        archive="${tag}-${version}.tar"
        [ -f "${WORKDIR}/${archive}" ] || bbfatal "${archive} does not exist"
        install -m 0400 "${WORKDIR}/${archive}" "${D}${datadir}/container-images/"
    done < "${WORKDIR}/${MANIFEST}"

    # Install the systemd service and the scripts it calls.
    install -d "${D}${systemd_unitdir}/system"
    install -m 0644 "${WORKDIR}/container-archive.service" "${D}${systemd_unitdir}/system"

    install -d "${D}${bindir}"
    install -m 0755 "${WORKDIR}/container-image.sh" "${D}${bindir}/container-image"
    install -m 0755 "${WORKDIR}/container-load.sh" "${D}${bindir}/container-load"
}
```

The do_install task installs the Docker archive files and the manifest file, as well as the systemd service and the scripts that load and run the Docker images.

Booting

The container image is loaded and started by a systemd service.

The “container-archive.service” systemd service requires Docker to be started and the “/var/lib/docker” directory to be writable. This directory can be backed by volatile or non-volatile storage, depending on whether the loaded images should persist after the first boot.

```
[Unit]
Description=Load and start container image at boot
After=mount-noauto.service docker.service
Requires=mount-noauto.service docker.service docker.socket

[Service]
Type=simple
RemainAfterExit=yes
ExecStartPre=/usr/bin/container-load start
ExecStart=/usr/bin/container-image start
ExecStop=/usr/bin/container-image stop
Restart=on-failure

[Install]
WantedBy=multi-user.target
```

The “container-archive.service” systemd service requires “mount-noauto.service” and “docker.service” to be started first:

- mount-noauto.service: backs the /var/lib/docker directory with a writable filesystem using a bind mount.

```
mount -o bind writable_dir /var/lib/docker
```

- docker.service: starts the Docker daemon.

Then, the following three steps are performed by the “recipes-support/container-images/files/container-load.sh” and “recipes-support/container-images/files/container-image.sh” scripts:

- container-load script: load the image(s) if they are not already loaded. This operation can take some time.

```
docker load -i container-archive-file.tar
```

- container-load script: tag the image with the “latest” tag.

```
docker tag "localhost/${image}:${version}" "localhost/${image}:latest"
```

- container-image script: start the image.

```
docker run -d --name container_name "localhost/${image}:latest"
```

Finally, we can see that the Docker images are running on the target.
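The container-load steps can be sketched as follows. This is not the repository’s container-load.sh but a minimal illustration, assuming the manifest and archives are installed in /usr/share/container-images (the usual expansion of ${datadir}/container-images), that the manifest is named “images.manifest”, and that the archives contain images named “localhost/<local-name>:<version>”, as produced by the Podman tagging step. `load_images` and the `DOCKER` override (used only to test the script without a daemon) are hypothetical. Checking with `docker image inspect` first is what keeps the slow `docker load` off the boot path once /var/lib/docker is persistent.

```shell
#!/bin/sh
# Hypothetical sketch of the container-load logic (not the layer's script).
# Assumed locations; DOCKER can be overridden to test without a daemon.
MANIFEST="${MANIFEST:-/usr/share/container-images/images.manifest}"
IMAGES_DIR="${IMAGES_DIR:-/usr/share/container-images}"
DOCKER="${DOCKER:-docker}"

load_images() {
    while read -r name version tag _; do
        [ -n "$tag" ] || continue
        image="localhost/${tag}"
        # Only run the slow "docker load" if the image is not already in the
        # Docker store (e.g. loaded on a previous boot).
        if ! "$DOCKER" image inspect "${image}:${version}" >/dev/null 2>&1; then
            "$DOCKER" load -i "${IMAGES_DIR}/${tag}-${version}.tar" || return 1
        fi
        # Give the image a version-independent name for the start scripts.
        "$DOCKER" tag "${image}:${version}" "${image}:latest" || return 1
    done < "$MANIFEST"
}

case "${1:-}" in
    start) load_images ;;
esac
```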

Embed multiple container archives with Podman and docker-compose

Building

Embedding multiple container archives with docker-compose follows roughly the same process, and all the container archive files are embedded in the root filesystem.

```
do_install() {
    local name version archive tag

    # Install the manifest, the docker-compose file and every archive listed.
    install -d "${D}${datadir}/container-images"
    install -m 0400 "${WORKDIR}/${MANIFEST}" "${D}${datadir}/container-images/"
    install -m 0400 "${WORKDIR}/docker-compose.yml" "${D}${datadir}/container-images/"
    while read -r name version tag _; do
        archive="${tag}-${version}.tar"
        [ -f "${WORKDIR}/${archive}" ] || bbfatal "${archive} does not exist"
        install -m 0400 "${WORKDIR}/${archive}" "${D}${datadir}/container-images/"
    done < "${WORKDIR}/${MANIFEST}"

    # Install the systemd service and the scripts it calls.
    install -d "${D}${systemd_unitdir}/system"
    install -m 0644 "${WORKDIR}/container-multiple-images.service" "${D}${systemd_unitdir}/system"

    install -d "${D}${bindir}"
    install -m 0755 "${WORKDIR}/container-multiple-images.sh" "${D}${bindir}/container-multiple-images"
    install -m 0755 "${WORKDIR}/container-load.sh" "${D}${bindir}/container-load"
}
```

Unlike the previous method, a docker-compose configuration file must also be installed in the target root filesystem.

```
version: '3.7'

services:
  myservice:
    image: localhost/busybox_container:latest
  myredisservice:
    image: localhost/redis_container:latest
  myhttpdservice:
    image: localhost/httpd_container:latest
```

The “container-multiple-archives.bb” recipe in the meta-embedded-containers repository provides a complete example.
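Since the manifest already lists every image, the docker-compose file could also be generated from it instead of being maintained by hand. A minimal sketch, assuming one service per manifest entry named after the image’s local name (the file above uses hand-picked names such as “myredisservice”); `gen_compose` is a hypothetical helper that could be called from a recipe task.

```shell
#!/bin/sh
# Hypothetical helper: write a docker-compose file with one service per
# manifest entry, each using the image tagged "latest" by container-load.
gen_compose() {
    printf "version: '3.7'\n\nservices:\n"
    while read -r name version tag _; do
        [ -n "$tag" ] || continue
        printf '  %s:\n    image: localhost/%s:latest\n' "$tag" "$tag"
    done < "${1:?usage: gen_compose MANIFEST}"
}
```

For instance, `gen_compose "${WORKDIR}/${MANIFEST}" > "${WORKDIR}/docker-compose.yml"` keeps the two files in sync, at the cost of losing custom service names and options.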

Booting

The systemd service loads the archive files and then starts the containers defined in the docker-compose file.

```
[Unit]
Description=Load and start multiple container images at boot with docker-compose
After=docker.service mount-noauto.service
Requires=docker.service docker.socket mount-noauto.service

[Service]
Type=simple
RemainAfterExit=yes
ExecStartPre=/usr/bin/container-load start
ExecStart=/usr/bin/container-multiple-images start
ExecStop=/usr/bin/container-multiple-images stop
Restart=on-failure

[Install]
WantedBy=multi-user.target
```

The steps are the same as in the previous approach, except that the images are started with docker-compose:

- mount-noauto.service: backs the /var/lib/docker directory with a writable filesystem using a bind mount.

```
mount -o bind writable_dir /var/lib/docker
```

- docker.service: starts the Docker daemon.

- container-load script: load the image(s) if they are not already loaded.

```
docker load -i container-archive-file.tar
```

- container-load script: tag the image with the “latest” tag.

```
docker tag "localhost/${image}:${version}" "localhost/${image}:latest"
```

- container-multiple-images script: start the images.

```
docker-compose -f docker-compose.yml up -d --force-recreate
```

The docker-compose configuration file refers to the loaded container images by their local names in the local Docker image store.
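The start/stop entry points used by the service can be sketched as a small wrapper. This is a hypothetical illustration, not the repository’s container-multiple-images.sh: the compose file path assumes the ${datadir}/container-images install location used by do_install, `stop` uses `docker-compose down` to stop and remove the containers, and `DOCKER_COMPOSE` exists only to test the script without Docker.

```shell
#!/bin/sh
# Hypothetical sketch of a start/stop wrapper around docker-compose.
COMPOSE_FILE="${COMPOSE_FILE:-/usr/share/container-images/docker-compose.yml}"
DOCKER_COMPOSE="${DOCKER_COMPOSE:-docker-compose}"

compose_ctl() {
    case "$1" in
        # Recreate the containers so each boot starts from the loaded images.
        start) "$DOCKER_COMPOSE" -f "$COMPOSE_FILE" up -d --force-recreate ;;
        # Stop and remove the containers created by "up".
        stop) "$DOCKER_COMPOSE" -f "$COMPOSE_FILE" down ;;
        *) echo "usage: $0 {start|stop}" >&2; return 2 ;;
    esac
}

if [ $# -gt 0 ]; then
    compose_ctl "$1"
fi
```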

Embed container images with Docker

The main difference from the previous approaches is that, instead of embedding one or more container archives into the root filesystem, the container images are pulled at build time and stored in the Docker store.

This means that the /var/lib/docker directory of the target root filesystem is populated at build time with all the container data Docker needs to run these images. This approach saves the image loading time at boot, which can be noticeable depending on the size of the container images and can introduce undesirable delays in the boot sequence.

Building

- The Docker daemon must be installed on the build machine. The “USER” environment variable must be set, and that user must belong to the sudoers group; otherwise, the Docker daemon cannot be started.

- Define an arbitrary, empty directory to be used as the Docker store.

- Start the Docker daemon, pull the image(s), and populate the Docker store.

```
do_pull_image() {
    [ -f "${WORKDIR}/${MANIFEST}" ] || bbfatal "${MANIFEST} does not exist"

    # Stop any running Docker daemon and empty the Docker store.
    [ -n "$(pidof dockerd)" ] && sudo kill "$(pidof dockerd)" && sleep 5
    [ -d "${DOCKER_STORE}" ] && sudo rm -rf "${DOCKER_STORE}"/*

    # Start the dockerd daemon with the vfs driver in order to store the
    # container layers as vfs layers. The default storage driver is overlay,
    # but it will not work on the target system: /var/lib/docker is
    # mounted as an overlay, and the overlay storage driver is not
    # compatible with an overlayfs backing filesystem.
    # The daemon runs in the background (&) so the task can go on.
    sudo /usr/bin/dockerd --storage-driver vfs --data-root "${DOCKER_STORE}" &

    # Wait a little before pulling to let the daemon be ready.
    sleep 5

    if ! sudo docker info; then
        bbfatal "Error launching docker daemon"
    fi

    local name version tag
    while read -r name version tag _; do
        if ! sudo docker pull "${name}:${version}"; then
            bbfatal "Error pulling ${name}"
        fi
    done < "${WORKDIR}/${MANIFEST}"

    sudo chown -R "${USER}" "${DOCKER_STORE}"

    # Clean temporary folders in the docker store.
    rm -rf "${DOCKER_STORE}/runtimes"
    rm -rf "${DOCKER_STORE}/tmp"

    # Kill dockerd daemon after use.
    sudo kill "$(pidof dockerd)"
}
```

The Docker daemon is started in this build task to ensure that it is up and freshly started for this task only; it is stopped once the image(s) have been pulled. The Docker store directory is specified with the “--data-root” option.

The vfs storage driver is used here because, in this approach, the Docker store is mounted as an overlay filesystem on the target, and the overlay storage driver does not work on top of overlayfs. After pulling the container images, the runtimes/ and tmp/ directories are removed from the Docker store, as they are created again when the Docker daemon starts at boot.

Finally, kill the Docker daemon.
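The fixed “sleep 5” works but can be fragile on a loaded build host. One alternative, sketched here as a hypothetical `wait_until` helper (not part of the layer), is to poll until a command succeeds, up to a bounded number of attempts.

```shell
#!/bin/sh
# Hypothetical helper: run a command until it succeeds.
# Usage: wait_until TRIES DELAY_SECONDS COMMAND [ARGS...]
wait_until() {
    tries=$1
    delay=$2
    shift 2
    while :; do
        if "$@" >/dev/null 2>&1; then
            return 0
        fi
        tries=$((tries - 1))
        # Give up once all attempts are used, without a final useless sleep.
        [ "$tries" -gt 0 ] || return 1
        sleep "$delay"
    done
}
```

In do_pull_image, the `sleep 5` and `docker info` check could then become `wait_until 30 1 sudo docker info || bbfatal "Error launching docker daemon"`, which returns as soon as the daemon answers.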

- Install the systemd service files and the populated Docker store into the target root filesystem.

```
do_install() {
    install -d "${D}${systemd_unitdir}/system"
    install -m 0644 "${WORKDIR}/container-image.service" "${D}${systemd_unitdir}/system/"

    install -d "${D}${bindir}"
    install -m 0755 "${WORKDIR}/container-image.sh" "${D}${bindir}/container-image"

    install -d "${D}${datadir}/container-images"
    install -m 0400 "${WORKDIR}/${MANIFEST}" "${D}${datadir}/container-images/"

    install -d "${D}${localstatedir}/lib/docker"
    cp -R "${DOCKER_STORE}"/* "${D}${localstatedir}/lib/docker/"
}
```

During the do_package task, BitBake analyzes all the files and binaries installed by a recipe: it lists the files in the recipe’s install directory, checks that each one belongs to a package, and resolves the dependencies of the binaries. Since the Docker store contains the container images’ own files and binaries, packaging reports missing dependencies and issues warnings (or errors), which is not desirable.

To avoid this, the packaging QA step is skipped, and the shared library resolver is configured to exclude the entire package when scanning for shared libraries, using the EXCLUDE_FROM_SHLIBS variable.

```
do_package_qa[noexec] = "1"
INSANE_SKIP_${PN}_append = " already-stripped"
EXCLUDE_FROM_SHLIBS = "1"
```

This is a workaround. Although it works, it does not address the license compliance and content listing problems that Yocto is intended to solve: nothing installed in the Docker image is listed. This problem was mentioned in the first article and remains unresolved.

Booting

At boot time, the mechanisms are the same as in the previous approaches, except that the /var/lib/docker directory has to be mounted as an overlayfs. All modifications to this directory go to the writable upper layer.
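A minimal sketch of that mount step, assuming the writable layer lives in a directory chosen by the integrator (for example on a data partition for persistence, or under /run for a volatile setup). `mount_docker_overlay` and the `MOUNT` override (used only for testing) are illustrative and not taken from the layer. overlayfs needs an upper directory, where the changes go, and an empty work directory, both on the same writable filesystem.

```shell
#!/bin/sh
# Hypothetical sketch: overlay a writable layer on the read-only Docker store.
# MOUNT can be overridden to test without root privileges.
MOUNT="${MOUNT:-mount}"

# Usage: mount_docker_overlay WRITABLE_DIR [LOWER_DIR]
mount_docker_overlay() {
    rw="${1:?usage: mount_docker_overlay WRITABLE_DIR [LOWER_DIR]}"
    lower="${2:-/var/lib/docker}"
    # Create the upper (changes) and work directories on the writable side.
    mkdir -p "${rw}/upper" "${rw}/work" || return 1
    # Mount the overlay on top of the read-only directory itself.
    "$MOUNT" -t overlay overlay \
        -o "lowerdir=${lower},upperdir=${rw}/upper,workdir=${rw}/work" "$lower"
}
```

This must run before docker.service starts, for instance from a oneshot service ordered before it.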

Conclusion

This article showed three different approaches to embedding container images into a Linux root filesystem, with a focus on Yocto integration. The source code is available in the meta-embedded-containers GitHub repository.

All three approaches considered are reasonable and valid, but they don’t work in quite the same way. As systems integrators, we consider all aspects of working with build systems such as Yocto or Buildroot. Our customers’ needs determine our approach, bearing in mind the challenges each one might pose.

This article closes the series of three articles on container usage in embedded systems. We welcome comments and discussions. Other aspects could be explored in future articles.

Is it possible to use the “Populate the Docker store into the target system” approach with Yocto Thud (Yocto 2.6), or does it depend on Yocto Dunfell?

Hi Dimitar!

I haven’t tested it with this Yocto version, but it will definitely be possible. I don’t see any blockers to using it with Yocto Thud :).

Good luck!