In the previous article, we discussed the motivations for and benefits of embedding a container image in an embedded Linux system, as well as the general challenges this paradigm raises.

In this article, we focus on different approaches based on the Yocto build system, with the Docker engine installed on the target machine. Balena OS provides an entire ecosystem and set of tools to run and manage one or more containers. We instead discuss the technical options on the target side, with a focus on a lightweight, minimal solution. To keep it lightweight, we provide only the minimal Yocto infrastructure needed to pull the container image, install it into the root filesystem and run it on the target. This infrastructure is designed as a Bitbake layer. The technical implementation of this Bitbake layer will be detailed in the next article.

Following this discussion, we want to focus on the technical side of some of the challenges described in the previous article. These include the integration of the Docker container image(s) into Yocto and improving operating system reliability in the face of data corruption.

First, we will give more insight into our build and target environments, then compare our different approaches and options for handling and integrating a Docker container image in Yocto's build system. Finally, we will address the differences between integrating Podman and Docker in the Yocto build system.

Running a Docker container in an embedded system

As stated earlier, the final goal is to run one or more Docker images on the target embedded system.

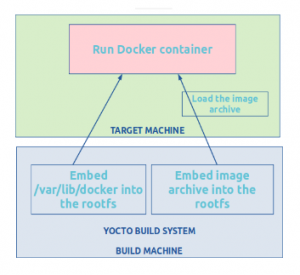

We have implemented two approaches within the Yocto build system:

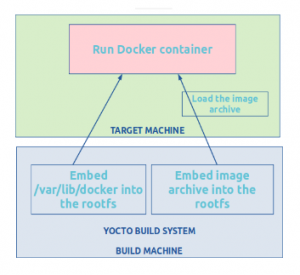

- Embed the Docker image archive into the root filesystem, OR

- Embed the Docker store into the root filesystem, which implies populating the /var/lib/docker directory at build time.

To achieve this goal, we first need to detail the constraints imposed by the target and by the build system. These requirements are not universal. They are still worth listing as an example, because they drove our technical choices.

Target requirements

The target system will have no network connectivity during its lifetime, so the Docker image has to be deployed during the provisioning step. This means we cannot simply run `docker pull <image>` at boot.

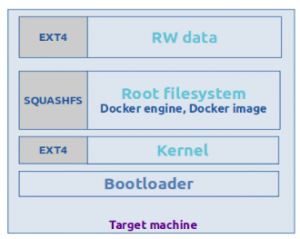

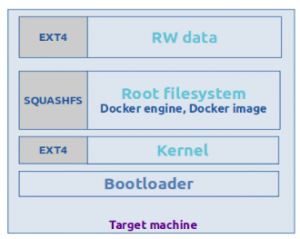

- Read-only root filesystem

The root filesystem is a SquashFS filesystem mounted read-only. In the previous article, data corruption was listed as one of the challenges of embedded systems. System reliability is one of our main concerns, so we avoid write operations on the root filesystem by mounting it read-only.

- Docker store in a writable filesystem

The Docker store is the root directory of Docker's persistent state (by default, the /var/lib/docker directory). On the target, this directory can live on a volatile filesystem (tmpfs) or on a non-volatile one (a writable partition). This choice determines whether the archive has to be loaded at every boot or only once, depending on whether the Docker store must be persistent.

- Docker as the container engine installed on the target

The image above shows an example of a target system made up of four partitions.

Build system

The build system used in this case is Yocto. The project statement reads, “The Yocto project is an open source collaboration project that helps developers create custom Linux-based OS for embedded products”.

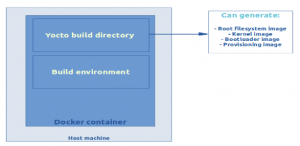

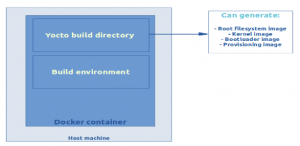

- Running the build system in a Docker container

During development, Yocto images are built both by our developers and by our CI (Jenkins). To avoid compatibility issues and satisfy the dependency requirements on every machine, the target artifacts are built inside a Docker container.

We use the cqfd tool, which provides a convenient way to run commands in the current Yocto build directory, but inside a Docker container.

Yocto generates a SquashFS root filesystem, a kernel image, a bootloader image and a provisioning ISO image, which installs all of these artifacts on the target machine.

It is important to underline that the problem we are trying to address is the same with another build system (Buildroot, OpenWrt). The Yocto build system is not a limiting factor here and is only taken as an example.

To meet the requirements listed above for both the target embedded system and the build system, we came up with two approaches. In both, the final goal remains to run a Docker image on the target embedded system.

Embed Docker archive in the root filesystem

The first approach is to install a Docker image archive in the root filesystem, then load the archive and start the image at boot.

Docker can save one or more images to a tar archive with the `docker save` command. This command is mostly used to create image backups that can later be restored with the `docker load` command.

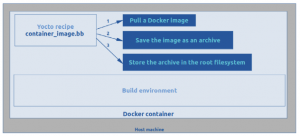

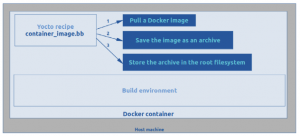

The idea is to create a recipe in Yocto which will:

- pull the Docker image that will be embedded in the target system,

- save this image as a docker-container archive,

- store this archive in the root filesystem.

The container engine will be used to perform the first two tasks.
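The three recipe tasks above can be sketched as an ordered list of commands. This is a minimal Python sketch, not the layer's actual recipe, and it makes several assumptions. The image name, the build work directory and the destination root are hypothetical; the destination root plays the role of Yocto's `${D}`. The `/usr/share/container-images` install location is also an assumption. A real recipe would typically run these commands from shell tasks.

```python
from pathlib import PurePosixPath
from typing import List

# Hypothetical install location of the archive inside the target root filesystem.
ARCHIVE_DIR = PurePosixPath("/usr/share/container-images")


def archive_name(image: str) -> str:
    """Turn an image reference such as 'registry/app:1.0' into 'registry_app_1.0.tar'."""
    return image.replace("/", "_").replace(":", "_") + ".tar"


def recipe_steps(image: str, workdir: str, destdir: str) -> List[List[str]]:
    """Commands to pull the image, save it as an archive and install it in the rootfs."""
    build_archive = str(PurePosixPath(workdir) / archive_name(image))
    # destdir stands for the recipe's installation directory (Yocto's ${D})
    target_dir = str(PurePosixPath(destdir) / ARCHIVE_DIR.relative_to("/"))
    return [
        ["docker", "pull", image],                             # 1. pull on the build host
        ["docker", "save", "-o", build_archive, image],        # 2. save as a tar archive
        ["install", "-d", target_dir],                         # 3a. create the target dir
        ["install", "-m", "0644", build_archive, target_dir],  # 3b. copy the archive
    ]


if __name__ == "__main__":
    for cmd in recipe_steps("alpine:3.12", "/tmp/work", "/tmp/image"):
        print(" ".join(cmd))
```

Each entry is an argv list that could be passed to `subprocess.run(cmd, check=True)`. Keeping the steps as data makes their order explicit and easy to check.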

At boot time:

- Mount the Docker store in a writable partition.

- Launch the Docker daemon.

- Load the Docker archive into the Docker store with the `docker load` command.

- Finally, run the Docker image.
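The boot sequence above can be sketched as four ordered commands. This is a minimal illustration, not the authors' init scripts. On a real target, an init script or systemd unit would start `dockerd` in the background and wait until it answers before loading the archive. The device path, archive path and image name are hypothetical. The string `tmpfs` selects a volatile store.

```python
from typing import List


def boot_steps(store: str, archive: str, image: str,
               data_root: str = "/var/lib/docker") -> List[List[str]]:
    """Ordered boot-time commands for the 'Docker archive in the rootfs' approach.

    `store` is either a block device holding a writable partition or the
    string 'tmpfs' for a volatile, RAM-backed Docker store.
    """
    if store == "tmpfs":
        mount = ["mount", "-t", "tmpfs", "tmpfs", data_root]
    else:
        mount = ["mount", store, data_root]
    return [
        mount,                                  # 1. writable Docker store
        ["dockerd", "--data-root", data_root],  # 2. daemon; runs in the foreground,
                                                #    so init must start it in the background
        ["docker", "load", "-i", archive],      # 3. import the archive shipped in the rootfs
        ["docker", "run", "-d", image],         # 4. start the container, detached
    ]


if __name__ == "__main__":
    for cmd in boot_steps("/dev/mmcblk0p4",
                          "/usr/share/container-images/alpine_3.12.tar",
                          "alpine:3.12"):
        print(" ".join(cmd))
```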

The Docker store can be mounted either on a volatile or on a non-volatile writable filesystem:

- In a volatile filesystem

Each boot performs the loading operation, but this guarantees the image integrity at each boot.

- In a non-volatile filesystem

Only the first boot performs the loading operation, so subsequent boots are faster. However, this does not guarantee the integrity of the image over the whole runtime. If the writable storage is purged, the original image can nonetheless be reloaded from the archive.
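The difference between the two cases comes down to a single check at boot: load the archive only when the image is missing from the store. With a tmpfs store the image is always missing, so the archive is loaded at every boot. With a persistent store it is loaded once, and again only if the store has been purged. This is a minimal sketch that assumes `docker image inspect` as the presence test, which exits with a non-zero status when the image is unknown. The image name and archive path are hypothetical.

```python
import subprocess
from typing import Callable, List

Runner = Callable[[List[str]], int]  # runs a command and returns its exit status


def run_quiet(cmd: List[str]) -> int:
    return subprocess.run(cmd, stdout=subprocess.DEVNULL,
                          stderr=subprocess.DEVNULL).returncode


def image_present(image: str, run: Runner = run_quiet) -> bool:
    # `docker image inspect` exits non-zero if the image is not in the store
    return run(["docker", "image", "inspect", image]) == 0


def ensure_loaded(image: str, archive: str, run: Runner = run_quiet) -> bool:
    """Load `archive` only if `image` is missing; return True if a load happened."""
    if image_present(image, run):
        return False  # persistent store already holds the image: skip the slow load
    if run(["docker", "load", "-i", archive]) != 0:
        raise RuntimeError("docker load failed for " + archive)
    return True


if __name__ == "__main__":
    loaded = ensure_loaded("alpine:3.12",
                           "/usr/share/container-images/alpine_3.12.tar")
    print("archive loaded" if loaded else "image already in store")
```

The `run` parameter exists only so the logic can be exercised without a Docker daemon.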

Populate the Docker store into the target system

Instead of embedding the container archive into the root filesystem, another solution is to embed the entire Docker store in it. This means populating the /var/lib/docker directory at build time with Yocto. Docker is the container engine used to pull the image, which implies a Docker-in-Docker setup.

The Docker store contains a lot of information, such as container layer data, volumes, builds, networks and clusters. At build time, the Docker store is embedded into the target root filesystem. The main advantage of this approach is that it minimizes the impact on boot time by removing the archive loading step, which can take several minutes depending on the image size. The Docker daemon can then run the container image directly.

Another significant advantage of this approach is that it reduces write operations on the persistent partition, and therefore the risk of data corruption. Checking the integrity of the SquashFS root partition also, by extension, verifies the Docker image integrity, which means the exact same Docker image is run at each boot.

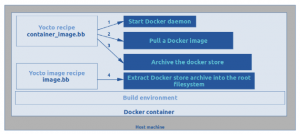

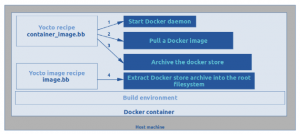

The main idea is to add a Yocto recipe and commands which will:

- Start the Docker daemon with a dedicated Docker store directory controlled by the recipe.

- Pull the Docker image which will be embedded in the target system.

- Stop the Docker daemon.

- Archive the Docker store.

- Extract the Docker store archive into the /var/lib/docker directory of the root filesystem.
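The build-time steps above can be sketched as follows. This is a minimal sketch that assumes the build runs as root in the privileged build container (Docker-in-Docker). The data root, socket path and archive path are hypothetical. Giving the daemon its own `--data-root` and `--host` socket is one way to obtain the dedicated store mentioned above. `extract_command` corresponds to the last step, which the text moves to the image recipe; the target directory must exist before extraction.

```python
import subprocess
import time
from pathlib import PurePosixPath
from typing import Callable, List

Runner = Callable[[List[str]], int]  # runs a command and returns its exit status


def run_cmd(cmd: List[str]) -> int:
    return subprocess.run(cmd).returncode


def wait_for_daemon(host: str, run: Runner, timeout: float = 60.0) -> None:
    """Poll `docker info` until the private daemon answers on its socket."""
    deadline = time.monotonic() + timeout
    while run(["docker", "--host", host, "info"]) != 0:
        if time.monotonic() > deadline:
            raise TimeoutError("dockerd did not start on " + host)
        time.sleep(1)


def populate_store(image: str, data_root: str, sock: str, archive: str,
                   run: Runner = run_cmd, popen=subprocess.Popen) -> None:
    host = "unix://" + sock
    # 1. private daemon with its own store and socket
    daemon = popen(["dockerd", "--data-root", data_root, "--host", host])
    try:
        wait_for_daemon(host, run)
        # 2. pull through the private daemon, so the layers land in data_root
        if run(["docker", "--host", host, "pull", image]) != 0:
            raise RuntimeError("docker pull failed for " + image)
    finally:
        # 3. stop the daemon (SIGTERM) so the store is consistent before archiving
        daemon.terminate()
        daemon.wait()
    # 4. archive the whole store
    if run(["tar", "-C", data_root, "-cf", archive, "."]) != 0:
        raise RuntimeError("tar failed for " + data_root)


def extract_command(archive: str, rootfs: str) -> List[str]:
    """5. (image recipe) unpack the store into <rootfs>/var/lib/docker."""
    target = str(PurePosixPath(rootfs) / "var/lib/docker")
    return ["tar", "-C", target, "-xpf", archive]
```

Stopping the daemon in a `finally` block ensures it does not keep running in the build container if the pull fails.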

To put it simply, Yocto keeps several directories for each recipe, for instance the "build", "installation", "packaging" and "deploy" directories. Yocto's build tool (Bitbake) analyzes all the files and binaries installed by each recipe. For each file located in the installation directory (binaries, libraries), Bitbake tries to link it to an existing Yocto package.

As a result, package dependencies would be added between the Docker image recipe and the content of the Docker container. We want the opposite: to keep the content of the container image as separate as possible from the Yocto recipe.

As a workaround, we chose to decouple the installation of the Docker store from the container recipe by performing it in the image recipe. In the image recipe, the archive of the /var/lib/docker directory is extracted into /var/lib/docker in the target root filesystem. This point is discussed in more detail in the next article.

At boot time:

- Mount an overlayfs over the Docker store, with its writable layer on a writable partition.

- Launch the Docker daemon.

- Run the container image.
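Mounting an overlayfs over the Docker store at boot can be sketched as a single `mount` command. The store shipped in the SquashFS root filesystem is the read-only `lowerdir`. Runtime changes go to an `upperdir` on the writable partition, and overlayfs's `workdir` must be an empty directory on that same partition. This is a minimal sketch that assumes the writable partition is mounted at a hypothetical `/data`. Mounting the overlay on `/var/lib/docker` itself should work, because the lower directory is resolved before the mount hides it. One point is worth checking: Docker's overlay2 storage driver is not supported on an overlayfs backing filesystem. The storage driver used on the target therefore has to be chosen with this layering in mind.

```python
from typing import List


def overlay_mount(lower: str, upper: str, work: str,
                  target: str = "/var/lib/docker") -> List[str]:
    """mount(8) command stacking a writable layer over the read-only Docker store."""
    options = ",".join([
        "lowerdir=" + lower,  # read-only store from the SquashFS root filesystem
        "upperdir=" + upper,  # runtime changes, on the writable partition
        "workdir=" + work,    # empty scratch dir on the same filesystem as upperdir
    ])
    return ["mount", "-t", "overlay", "overlay", "-o", options, target]


if __name__ == "__main__":
    print(" ".join(overlay_mount("/var/lib/docker",
                                 "/data/docker/upper",
                                 "/data/docker/work")))
```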

Using a container engine in a Docker container

In the previous sections, we detailed both approaches to embedding a Docker image in the target root filesystem. We would now like to focus on the container engines that can be run inside a Docker container. This matters here because we chose a Docker container as the environment for our Yocto build system. We therefore have to account for the impact of running a container engine inside a Docker container.

Many container engines exist, but we need one that can handle the Docker image format. Both Podman and Docker can produce several types of container archives, including Docker archives, with the `podman save` and `docker save` commands.
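For the archive approach, the save command is the only engine-specific part of the recipe. `podman save` accepts a `--format` option, and `docker-archive` is the format that `docker load` reads back. `docker save` has no format option. This is a minimal sketch; the image name and archive path used with it are illustrative.

```python
from typing import List


def save_command(engine: str, image: str, archive: str) -> List[str]:
    """Command that saves `image` as a tar archive readable by `docker load`."""
    if engine == "docker":
        return ["docker", "save", "-o", archive, image]
    if engine == "podman":
        # request the Docker archive format explicitly
        return ["podman", "save", "--format", "docker-archive", "-o", archive, image]
    raise ValueError("unsupported container engine: " + engine)


if __name__ == "__main__":
    for engine in ("docker", "podman"):
        print(" ".join(save_command(engine, "alpine:3.12", "alpine_3.12.tar")))
```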

So, we will consider two viable and working solutions: Podman-in-Docker and Docker-in-Docker.

Docker and Podman recipes already exist for the Yocto build system in the meta-virtualization layer, but they are not packaged as native tools. This means the chosen container engine has to be installed on the build system. For our use case, this implies that Podman or Docker is installed in a Docker container.

Both the Podman-in-Docker and Docker-in-Docker solutions allow us to achieve our goal, but they raise some considerations, such as:

- The outer Docker container must be run with the `--privileged` flag, and with Docker-in-Docker it also runs a daemon with root privileges.

- When running Podman-in-Docker or Docker-in-Docker, the outer Docker runs on top of a regular filesystem (ext4, overlayfs). The inner container engine runs on top of a copy-on-write storage layer (AUFS, VFS, ZFS, overlayfs), depending on how it is set up. However, many combinations do not work, such as overlayfs over overlayfs, because Linux does not support nesting filesystems that offer unified views in this way. Workarounds exist, for example placing the inner engine's store on a mounted ext4 volume, but the setup is not entirely straightforward.

- With Docker-in-Docker, the inner and outer Docker daemons need separate /var/lib/docker directories. Sharing the same Docker store between two Docker daemon instances is a bad idea. Both daemons may access the same resources simultaneously, causing data corruption.
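Both points can be handled when starting the inner daemon. Give it a dedicated data root, and pick its storage driver from the filesystem that actually backs that directory. This is a minimal sketch with several assumptions. The backing filesystem is read from `/proc/mounts`, whose fields are device, mount point, type and so on. The rule for choosing a driver is simplified to match the table below: overlay2 on ext4 or xfs (xfs must be formatted with ftype=1), and vfs otherwise, for example when the directory sits on the container's own overlayfs. The paths are hypothetical.

```python
from typing import List, Optional


def fs_type_for(path: str, mounts_text: str) -> Optional[str]:
    """Filesystem type of the mount containing `path`, from /proc/mounts content."""
    best_mnt, best_type = "", None
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) < 3:
            continue
        mnt, fstype = fields[1], fields[2]
        inside = path == mnt or path.startswith(mnt.rstrip("/") + "/")
        # Longest matching mount point wins; on a tie the later entry is the
        # one stacked on top, so it replaces the earlier one.
        if inside and len(mnt) >= len(best_mnt):
            best_mnt, best_type = mnt, fstype
    return best_type


def pick_storage_driver(fstype: Optional[str]) -> str:
    """Simplified rule: overlay2 on ext4/xfs, vfs anywhere else (e.g. on overlayfs)."""
    return "overlay2" if fstype in ("ext4", "xfs") else "vfs"


def inner_dockerd(data_root: str, mounts_text: str) -> List[str]:
    """Command line for the inner daemon, with its own dedicated data root."""
    driver = pick_storage_driver(fs_type_for(data_root, mounts_text))
    return ["dockerd", "--data-root", data_root, "--storage-driver", driver]


if __name__ == "__main__":
    with open("/proc/mounts") as f:
        print(" ".join(inner_dockerd("/build/docker-store", f.read())))
```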

The following table summarizes some pros and cons of both solutions.

| Container engine | Advantages | Disadvantages |
|---|---|---|
| Podman-in-Docker | Standalone process; can handle docker-container archives; can use the overlay storage driver for large container images (the overlayfs driver is used on a mounted ext4 volume) | We had problems running Podman-in-Docker with versions newer than 1.5.1; sudo privileges are needed to clean the Podman store |
| Docker-in-Docker | No need to convert the container image; uses the vfs storage driver, but the overlayfs storage driver can be used on a mounted ext4 volume | The daemon must be started before pulling the image; sudo privileges are needed to run the Docker daemon and to clean the Docker store |

Conclusion

Two possibilities have been considered:

| Solution | Advantages | Downsides | Container engine |
|---|---|---|---|
| Integrating the Docker image archive in the root filesystem | Easier to track the pulled image; easier integration in Yocto | Longer boot time, since the container image loading time is added; the impact depends on whether the container store is on volatile or non-volatile storage | Docker-in-Docker or Podman-in-Docker |
| Integrating the Docker store in the root filesystem | Faster boot time; image integrity ensured by the root filesystem at boot and runtime; fewer write operations on the writable partition | The Docker daemon must be started at build time before pulling the image; the Docker store extraction must be decoupled from the container recipe | Docker-in-Docker |

Both techniques reach the same end goal but set different priorities, so the choice depends on the target system requirements. Integrating a Docker image archive favors ease of integration at the expense of a longer boot time. This approach also leaves the choice between the Docker-in-Docker and Podman-in-Docker solutions.

Conversely, integrating the Docker store favors image integrity, guaranteed by the root filesystem at boot and runtime, as well as a faster boot time. However, the Docker-in-Docker solution it requires is more difficult to integrate into the Yocto build system.

In the next article, we will focus on different examples of Yocto recipes implementing these different possibilities.

Excellent article – thanks for sharing. On my Embedded Linux project, I have implemented what you call “Embed Docker archive in the root filesystem” in my Yocto Build. The device I am using is a smaller class processor and so my very first boot-up time is quite slow. I have not figured out how to implement “Populate the Docker store into the target system” with Yocto. I look forward to hearing how you implemented that. Thanks again!

Hi Phil,

Thanks for your feedback. The third article will be released soon, along with the Yocto meta layer implementing both approaches. Also, if you are interested, I will present this subject at the Yocto Dev Day (October 29-30, 2020).

Regards!

Hello Sandra,

Great series of articles, very interesting! Today I only use "chroot" in my Yocto setup, but I was planning to move to Docker/Podman, and these articles will be very useful for seeing the options for integrating containers 🙂

Thanks!

Is it possible to implement the "Populate the Docker store into the target system" approach with Yocto Thud (Yocto 2.6), or does it depend on Yocto Dunfell?